AMD Takes Major Steps to Make AI Compute More Efficient, Achieves 97% Reduction in Energy Consumption Compared to Systems from Five Years Ago

AMD plans to make rack-scale AI clusters far more energy efficient: Team Red is targeting a 20x increase in rack-scale efficiency by 2030, measured from a 2024 baseline, to make AI compute more scalable.

AMD Aims to Keep AI Power Demands in Check With Large Efficiency Gains

[Press Release]: At AMD, energy efficiency has long been a guiding core design principle aligned to our roadmap and product strategy. For more than a decade, we’ve set public, time-bound goals to dramatically increase the energy efficiency of our products and have consistently met and exceeded those targets. Today, I’m proud to share that we’ve done it again, and we’re setting the next five-year vision for energy efficient design.

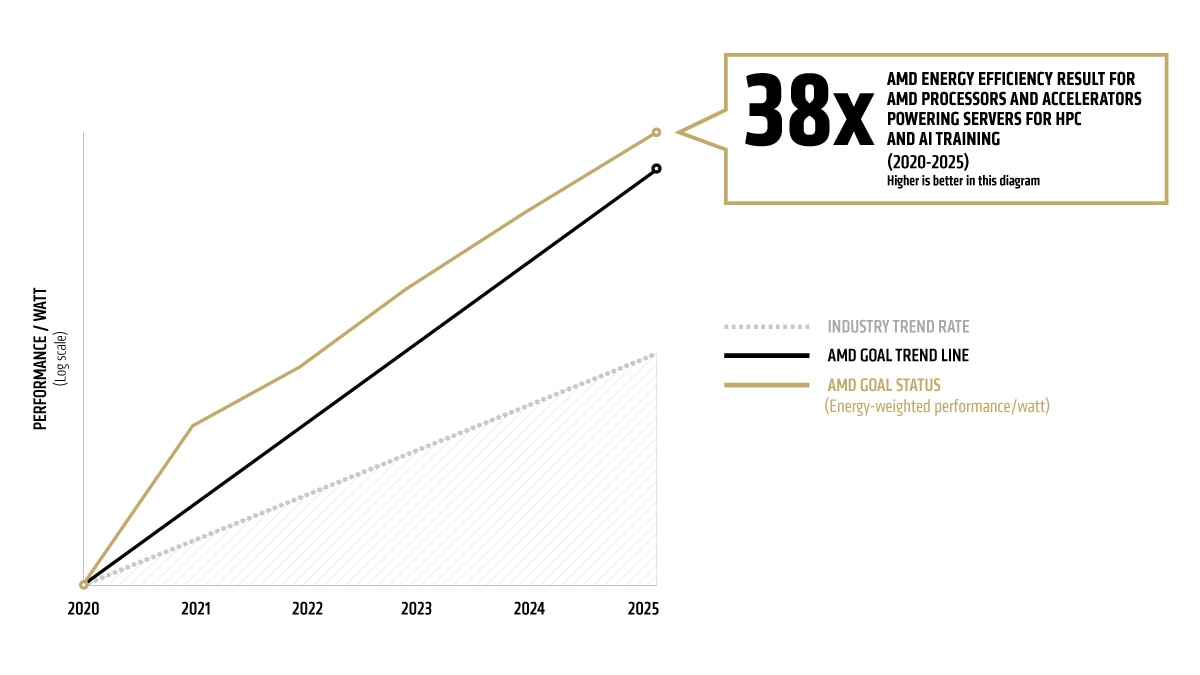

Today at Advancing AI, we announced that AMD has surpassed our 30×25 goal, which we set in 2021 to improve the energy efficiency of AI-training and high-performance computing (HPC) nodes by 30x from 2020 to 2025. This was an ambitious goal, and we’re proud to have exceeded it, but we’re not stopping here.

As AI continues to scale, and as we move toward true end-to-end design of full AI systems, it’s more important than ever for us to continue our leadership in energy-efficient design work. That’s why today, we’re also setting our sights on a bold new target: a 20x improvement in rack-scale energy efficiency for AI training and inference by 2030, from a 2024 base year.

A New Goal for the AI Era

As workloads scale and demand continues to rise, node-level efficiency gains won’t keep pace. The most significant efficiency impact can be realized at the system level, where our 2030 goal is focused.

We believe we can achieve a 20x increase in rack-scale energy efficiency for AI training and inference by 2030, from a 2024 base year, which AMD estimates exceeds the industry improvement trend from 2018 to 2025 by almost 3x. This reflects performance-per-watt improvements across the entire rack, including CPUs, GPUs, memory, networking, storage and hardware-software co-design, based on our latest designs and roadmap projections. This shift from node to rack is made possible by our rapidly evolving end-to-end AI strategy and is key to scaling datacenter AI in a more sustainable way.
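To put the 20x target in per-year terms, the short sketch below converts a total efficiency multiple into an implied average annual multiple. It assumes steady compounding over the six years from the 2024 base year to 2030, which is an illustrative simplification rather than anything AMD states; the function name `annualized_gain` is hypothetical.

```python
def annualized_gain(total_gain: float, years: int) -> float:
    """Average yearly efficiency multiple implied by a total multiple.

    Assumes the gain compounds evenly each year (an illustrative assumption).
    """
    if total_gain <= 0 or years <= 0:
        raise ValueError("total_gain and years must be positive")
    return total_gain ** (1.0 / years)


# 20x over 2024 -> 2030 (six years), per the goal described above.
yearly = annualized_gain(20, 6)
print(f"Implied average yearly multiple: {yearly:.2f}x")  # about 1.65x
```

Under that assumption, the goal works out to roughly a 65% efficiency improvement each year, compounded.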

What This Means in Practice

A 20x rack-scale efficiency improvement at nearly 3x the prior industry rate has major implications. Using training for a typical AI model in 2025 as a benchmark, the gains could enable:

- Rack consolidation from more than 275 racks to less than one fully utilized rack

- More than a 95% reduction in operational electricity use

- Carbon emission reduction from approximately 3,000 to 100 metric tCO2 for model training
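The link between an efficiency multiple and a percentage reduction is simple arithmetic, and a minimal sketch makes it explicit. It assumes the same amount of work is done before and after, so energy use scales as the inverse of performance per watt; the function names are hypothetical and the inputs are the figures quoted above.

```python
def energy_reduction(efficiency_gain: float) -> float:
    """Fraction of energy saved for the same work at a given efficiency multiple."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


def reduction_between(before: float, after: float) -> float:
    """Fractional reduction when a quantity drops from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 1.0 - after / before


# A 20x efficiency gain means the same work needs 1/20 of the energy.
print(f"20x efficiency: {energy_reduction(20):.1%} less energy")
# The quoted emissions figures: roughly 3,000 down to 100 metric tCO2.
print(f"3,000 to 100 tCO2: {reduction_between(3000, 100):.1%} lower")
```

A 20x gain alone corresponds to a 95% cut, while the quoted emissions figures amount to a cut of about 96.7%, which is consistent with the "more than a 95% reduction" stated in the list.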

We’re excited to keep pushing the limits, not just of performance, but also of what’s possible when efficiency leads the way. As we work toward this goal, we will continue to share updates on our progress and on the effects these gains are enabling across the ecosystem.